![User instruction: Pick up the paper airplane.

[sep]'''

Estimated characteristics:

m: 5.0g

µ: 0.5

k: 20.0 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 30.0

initial_force = slip_threshold = 0.098

additional_closure = 2.0

additional_force_increase = 0.01

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 184.9 N/m](videos/01_plane.png)

![User instruction: Pick up the empty paper cup.

[sep]'''

Estimated characteristics:

m: 10g

µ: 0.4

k: 50.0 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 75.0

initial_force = slip_threshold = 0.025

additional_closure = 5.0

additional_force_increase = 0.025

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 389.8 N/m](videos/02_empty-cup.png)

![User instruction: Pick up the yuba (dried tofu skin).

[sep]'''

Estimated characteristics:

m: 20g

µ: 0.5

k: 200 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 25.0

initial_force = slip_threshold = 0.025

additional_closure = 2.0

additional_force_increase = 0.04

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 157.7 N/m](videos/03_yuba.png)

![User instruction: Pick up the raspberry.

[sep]'''

Estimated characteristics:

m: 5.0g

µ: 0.8

k: 50.0 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 18.0

initial_force = slip_threshold = 0.0625

additional_closure = 1.0

additional_force_increase = 0.01

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 61.0 N/m](videos/04_raspberry.png)

![User instruction: Pick up the hard-shell tortilla.

[sep]'''

Estimated characteristics:

m: 50g

µ: 0.5

k: 1000 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 65.0

initial_force = slip_threshold = 0.98

additional_closure = 2.0

additional_force_increase = 0.2

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 188.4 N/m](videos/05_taco.png)

![User instruction: Pick up the mandarin.

[sep]'''

Estimated characteristics:

m: 150g

µ: 0.8

k: 500 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 50.0

initial_force = slip_threshold = 1.88

additional_closure = 2.0

additional_force_increase = 0.1

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 151.8 N/m](videos/06_mandarin.png)

DG policy shown within code block.![User instruction: Pick up the tail of the stuffed animal.

[sep]'''

Estimated characteristics:

m: 50g

µ: 0.8

k: 100 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 25.0

initial_force = slip_threshold = 0.61

additional_closure = 2.0

additional_force_increase = 0.05

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 192.8 N/m](videos/07_stuffed_animal.png)

![User instruction: Pick up a paper cup filled with water.

[sep]'''

Estimated characteristics:

m: 250g

µ: 0.6

k: 200 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 75.0

initial_force = slip_threshold = 4.08

additional_closure = 2.0

additional_force_increase = 0.4

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 373.2 N/m](videos/08_water_cup.png)

![User instruction: Pick up a plastic bag containing noodles.

[sep]'''

Estimated characteristics:

m: 500g

µ: 0.4

k: 300 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 90.0

initial_force = slip_threshold = 12.3

additional_closure = 5.0

additional_force_increase = 0.15

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 4324. N/m](videos/09_noodles.png)

![User instruction: Pick up the avocado.

[sep]'''

Estimated characteristics:

m: 200g

µ: 0.5

k: 500 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 60.0

initial_force = slip_threshold = 3.92

additional_closure = 2.0

additional_force_increase = 1.0

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 298.7 N/m](videos/10_avocado.png)

![User instruction: Pick up a plastic bottle containing water.

[sep]'''

Estimated characteristics:

m: 500g

µ: 0.4

k: 150 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 48.0

initial_force = slip_threshold = 12.2

additional_closure = 2.0

additional_force_increase = 0.03

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 1383. N/m](videos/11_spray.png)

![User instruction: Pick up a plastic bag containing rice.

[sep]'''

Estimated characteristics:

m: 1000g

µ: 0.5

k: 200 N/m

'''

from magpie.gripper import Gripper

G = Gripper()

G.reset_parameters()

goal_aperture = 80.0

initial_force = slip_threshold = 19.6

additional_closure = 5.0

additional_force_increase = 1.0

k = G.grasp(goal_aperture, slip_threshold, additional_closure, additional_force_increase)

print(k)

## k: 6192. N/m](videos/12_rice.png)

Abstract

Large language models (LLMs) can provide rich physical descriptions of most worldly objects, allowing robots to achieve more informed and capable grasping. We leverage LLMs' common sense physical reasoning and code-writing abilities to infer an object's physical characteristics—mass m, friction coefficient µ, and spring constant k—from a semantic description, and then translate those characteristics into an executable adaptive grasp policy. Using a two-finger gripper with a built-in depth camera that can control its torque by limiting motor current, we demonstrate that LLM-parameterized but first-principles grasp policies outperform both traditional adaptive grasp policies and direct LLM-as-code policies on a custom benchmark of 12 delicate and deformable items including food, produce, toys, and other everyday items, spanning two orders of magnitude in mass and required pick-up force. We then improve property estimation and grasp performance on variable size objects with model finetuning on property-based comparisons and eliciting such comparisons via chain-of-thought prompting. We also demonstrate how compliance feedback from DeliGrasp policies can aid in downstream tasks such as measuring produce ripeness.

Approach Overview

Large language models (LLMs) are able to supervise robot

control in manipulation across high-level step-by-step

task planning, low-level motion planning, and determining

grasp positions conditioned on a given object's

semantic properties. These methods inherently assume that the acts of “picking”

and “placing” are straightforward tasks, and cannot acount for

contact-rich manipulation tasks, such as

grasping a paper airplane, deformable plastic

bags containing dry noodles, or ripe produce.

In this work, we propose DeliGrasp, which leverages LLMs' .

common-sense physical reasoning

and code-writing abilities to infer the physical characteristics of gripper-object interactions,

including mass, spring constant, and friction, to obtain grasp policies

for these kinds of delicate and deformable objects. We formulate an adaptive grasp controller

with slip detection derived from the inferred characteristics,

endowing LLMs embodied with any current-controllable

gripper with adaptive, open-world grasp skills for objects

spanning a range of weight, size, fragility, and compliance.

Adaptive Grasp Policies

The minimum grasp force required to pick an object up is bounded between object slip acceleration and gripper upwards acceleration (2.5 m/s2 for the UR5 robot arm), given an object's mass, m, and friction coefficient, µ.

We task an LLM (GPT-4) with predicting these quantities for an arbitrary object. To generate grasp policies, we leverage a dual-prompt structure similar to that of Language to Rewards, with an initial grasp “descriptor” prompt which estimates object characteristics and special accommodations, if needed, from the input object description. The “descriptor” prompt produces a structured description, which the subsequent “coder” prompt translates into an executable Python grasp policy that modulates gripper compliance, force, and aperture according to the controller described above.

Results

We test DeliGrasp on a UR5 robot

arm and a MAGPIE gripper looking top-down on a table

with a palm-integrated Intel RealSense D-405 camera against

a dataset of 12 delicate and deformable objects.

We compare our method against 5 baselines:

1) in-place adaptive grasping with 2N and 10N grasp force thresholds,

2) in-motion adaptive grasping with 0.5N and 1.5N initial force,

3) a direct estimation baseline

in which DeliGrasp directly estimates the parameters of the adaptive grasping algorithm, contact force, force gain, and aperture gain,

4) a perception-informed baseline, where the gripper closes to an visually-determined object device-width, and

5) a traditional force-limited baseline, where the gripper closes until it cannot output any more force (set to 4 N).

We also include two additional DeliGrasp configurations: 1) with

PhysObjects finetuning and 2) with PhysObjects finetuning and chain-of-thought prompting.

As shown below, DeliGrasp performs equivalently or better than the baselines

on 10/12 objects and outperforms the baselines outright on 5/12 objects.

Where force-limited grasps deform objects, and visually-informed grasps slip, DeliGrasp

is successfully picks up objects with minimal deformation. While the direct estimation baseline is closer in performance,

we observe higher volatility in estimated parameters, due to a lack

of common-sense physical grounding, and thus, lower reliability and interpretability.

While the traditional adaptive grasping methods are more successful, particularly the "In Motion"

strategy, traditional methods are not as versatile as DeliGrasp

and are further dependent on their expert-tuned parameters for performance.

"In Motion" and "In Place" strategies with lower force settings are more successful

on lighter objects and the strategies with higher force settings are more successful

on the heavier objects. And all traditional methods crush highly delicate and light

objects like the raspberry. We conclude that it is the LLM's common

sense reasoning that enables such a dynamic grasping range in object mass and stiffness.

DeliGrasp failures are primarily slip failures, as the simple controller is not robust to non-linearly and/or highly compliant objects such

as the bag of noodles, bag of rice, spray bottle, and stuffed animal. Deforming failures did not occur, despite occasional mass overestimations,

because the applied force goalpoint, Fmin, is set to the estimated object's slip force rather than its maximum force. We show some of these failures below.

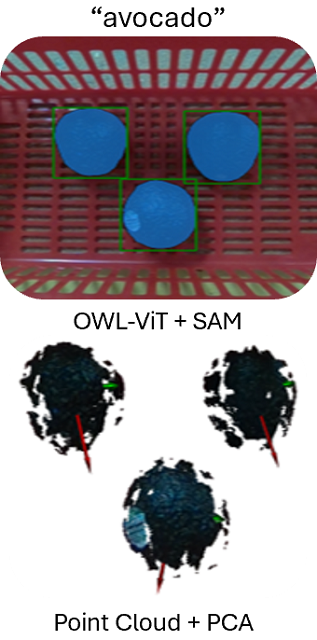

We also show how the compliance feedback from DeliGrasp policies enables downstream tasks such as measuring produce ripeness. In the below sequence, we show how DeliGrasp performs semantic segmentation on a bin of avocados, generates different grasp policies for different actions "checking for ripeness" vs. "picking up", and uses the measured compliance to pick an appropriate avocado for "guacamole today".